Pick and Place

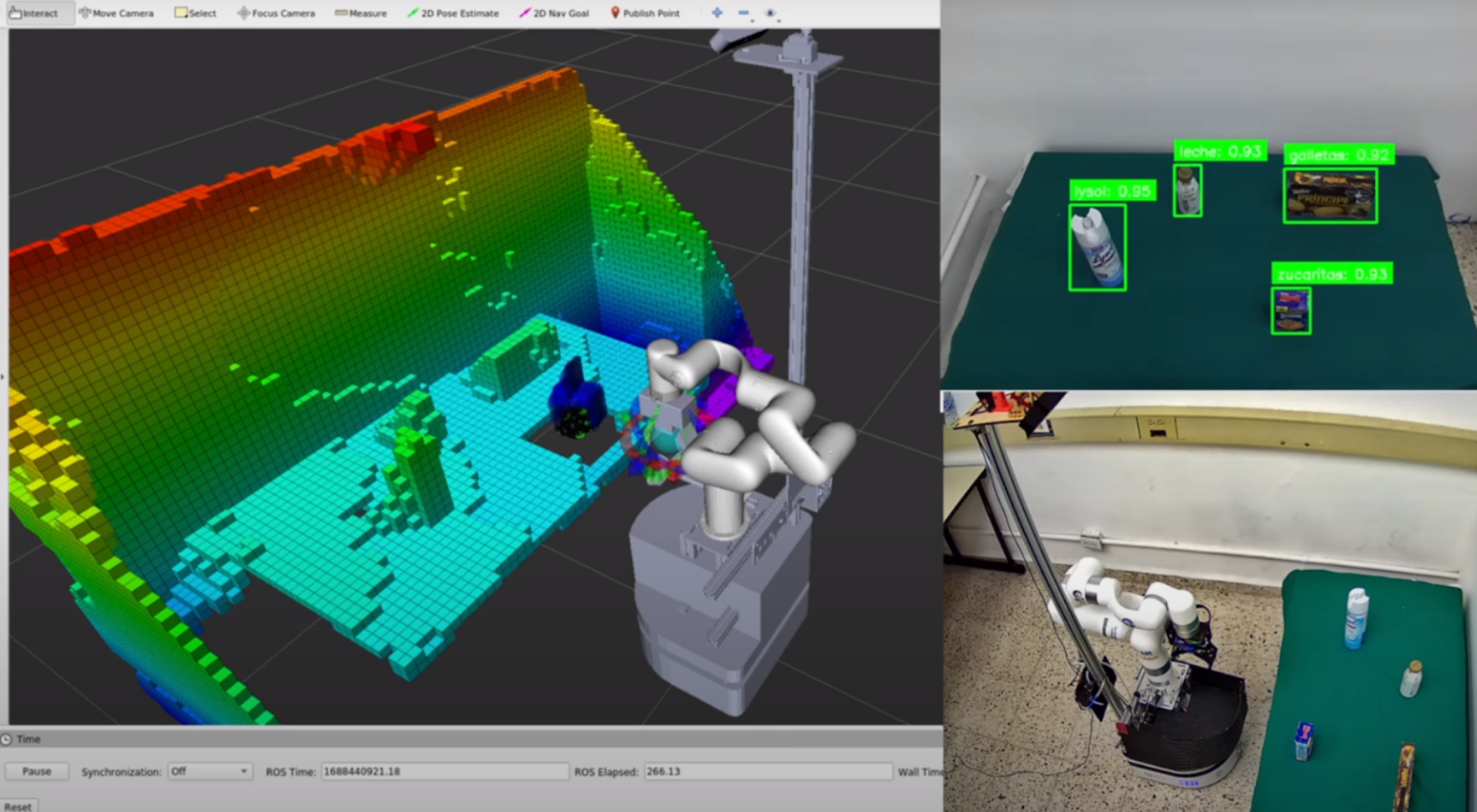

The process starts with vision information from 2D object detection nodes as well as 3D point cloud information being processed both for octomap generation and object extraction. The robot uses standard OMPL algorithms from MoveIt! for motion planning but the action servers for grasping and placing objects were developed from scratch, specific to the robot capabilities.

Picking objects

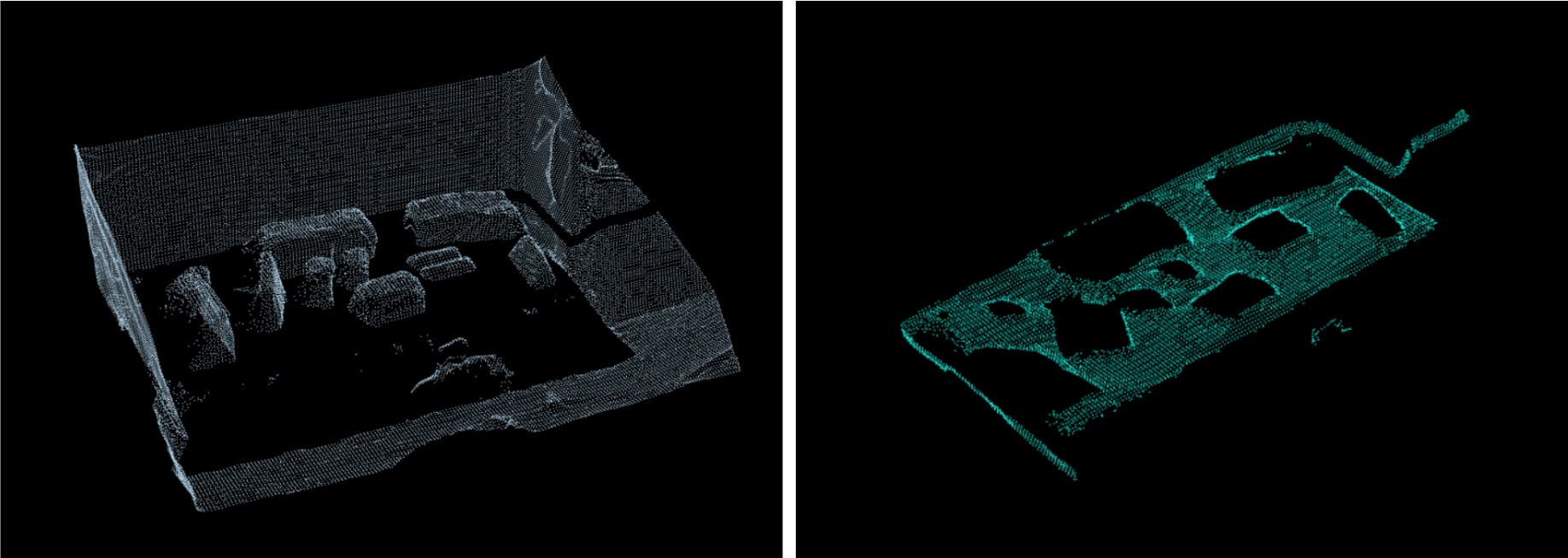

Once a requested object is detected from the live image, a RANSAC algorithm is used to to find the table the robot is looking at and cuts it off from the pointcloud, which leaves the object meshes available for identification. This process has proven to be highly consistent in obtaining clean meshes with an adequate speed, and has been the object 3D extraction solution since the AirLab Stacking Challenge.

A Grasping Pose Detector (GPD) model is then used to obtain possible gripper positions to pick the object matched with the 2D detection position, and MoveIt is used to plan the trajectory considering the robot itself and the environment (through a live octomap) to avoid collisions.

Placing objects

Considering the possibilities of non-empty tables where the robot should place objects, two different methods have been developed to build possible placing positions.

The first approach is a language-level C++ algorithm that finds the best spot for a place position. The development for this solution uses only STL libraries within the language and not any other 3rd party libraries for the processing.

To avoid inefficient run times, each real-world 3D point targeted is processed using a hash function to be mapped into sections of 5cm. Initially, a boolean matrix is defined as being true if the robot can reach a spot, and false otherwise.

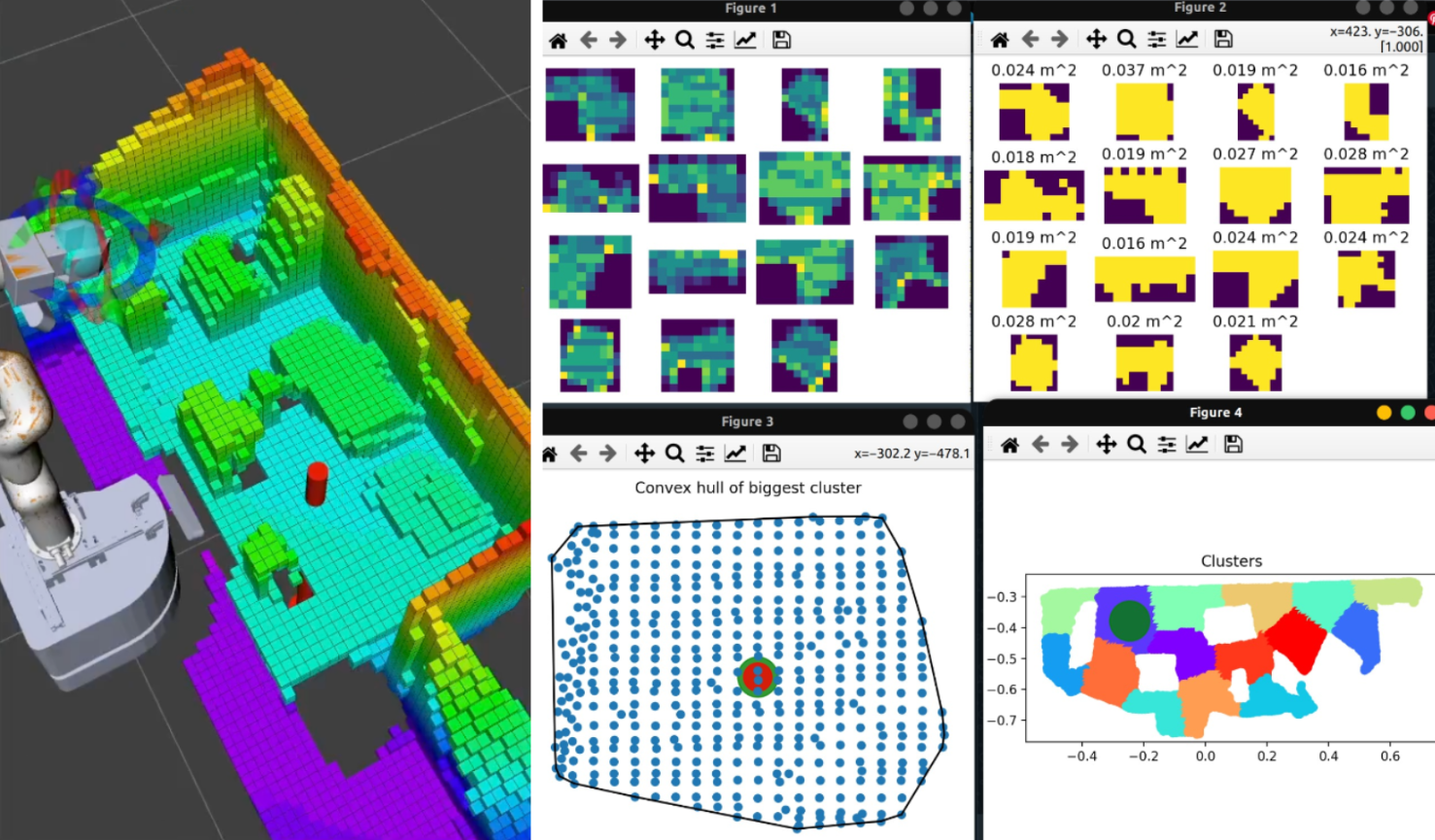

RANSAC is used again to find the biggest table in the robot vision and creates a new boolean matrix with the table limits and the hash function described previously. A new possible placings matrix is generated by comparing and finding the intersection between the robot possible positions and the table points.

After finding the table, PCL is used to find the clusters of each object above the table, these slots in the possible placings matrix are removed after mapping the objects points.

When all the 3D objects have been processed, the boolean possible placings matrix is complete. A prefix sum area matrix is then computed by following the simple formulae of:

Each position in this integer matrix represents the number of slots of 5cm available for placing before it.

As a placing near the center of the robot is preferred, a Breadth First Search algorithm is run in this prefix sum area matrix, when it find a position with area of minimum 1.5x the surface area of the object currently held, it returns the center of this area, remapped with the hash function reversed, as a Pose Stamped for the placing position.

Then, MoveIt is used for the planning of the trajectory towards the Pose given. When the position is reached, it opens the gripper, placing the object.

The second solution aims at solving scenarios with more complex table and object positions and angles relative to the robot, as it considers all points in the pointcloud as they are. First, the point cloud is filtered to only consider the area reachable by the Xarm6, and executes the RANSAC to obtain only the table point cloud, which is sent to a clustering server for processing. It also considers the objects observed in the filtered area and also sends the number of objects present in that area of the table. The server processes the point cloud received as a 2D scatter plot, over which it runs a k-means clustering algorithm, which resulting clusters are used to evaluate the area with the most available space. The number of clusters generated is proportional to the number of objects in the area observed. A factor for multiplication is added so that, for every object, N number of clusters is generated so that different clusters are generated around each object. Currently, 4 clusters per object are analyzed, following that for every object a cluster is generated in each cardinal direction.

After every cluster is generated, the one with the largest area is selected. The area is calculated through generation of a point density histogram for each cluster, which divides the plot on a size proportional grid and uses a minimum pixel density threshold to count for occupied and unoccupied areas of the histogram. The largest cluster obtained then has its centroid calculated and is sent as a 3D pose for object placing.